Starting Flink on YARN

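With Hadoop already installed on the local machine, I followed the official documentation: https://ci.apache.org/projects/flink/flink-docs-release-1.5/ops/deployment/yarn_setup.html

Run the command: ./bin/yarn-session.sh -n 4 -jm 1024 -tm 4096

That should be all it takes to start a session. Annoyingly, it kept failing with this error: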

org.apache.flink.configuration.IllegalConfigurationException: The number of virtual cores per node were configured with 1 but Yarn only has -1 virtual cores available. Please note that the number of virtual cores is set to the number of task slots by default unless configured in the Flink config with 'yarn.containers.vcores.'

I searched for a long time and found nothing; apparently nobody else has reported this error. My guess was that it was a YARN configuration problem, so I googled the YARN vcores settings and, sure enough, found this: https://mapr.com/blog/best-practices-yarn-resource-management/

The number of vcores can be set in yarn-site.xml:

<property>
  <name>yarn.nodemanager.resource.cpu-vcores</name>
  <value>5</value>
</property>

After changing this and restarting YARN, the error above went away, but a new one appeared:

The environment variable 'FLINK_LIB_DIR' is set to '/home/wangzhongfu/flink-1.5.0/lib' but the directory doesn't exist.

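A likely cause: Hadoop is running natively on Windows, while the Flink YARN command is a shell script running under Cygwin, so the two sides disagree about how the directory path is resolved.

Sigh. Enough fiddling; I am going to set up an Ubuntu virtual machine instead.

To be continued...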

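Update, 2018-06-23

I gave up on the virtual machine; it was far too slow. Starting on YARN in the test environment produced the same error as above. The startup log showed that Flink was trying to connect to 0.0.0.0:8032, which clearly means the Hadoop configuration was not being found. Setting this environment variable fixes that: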

export HADOOP_CONF_DIR=/your path/flink/hadoop-2.4.1/etc/hadoop

With that set, startup no longer reports the vcores error.

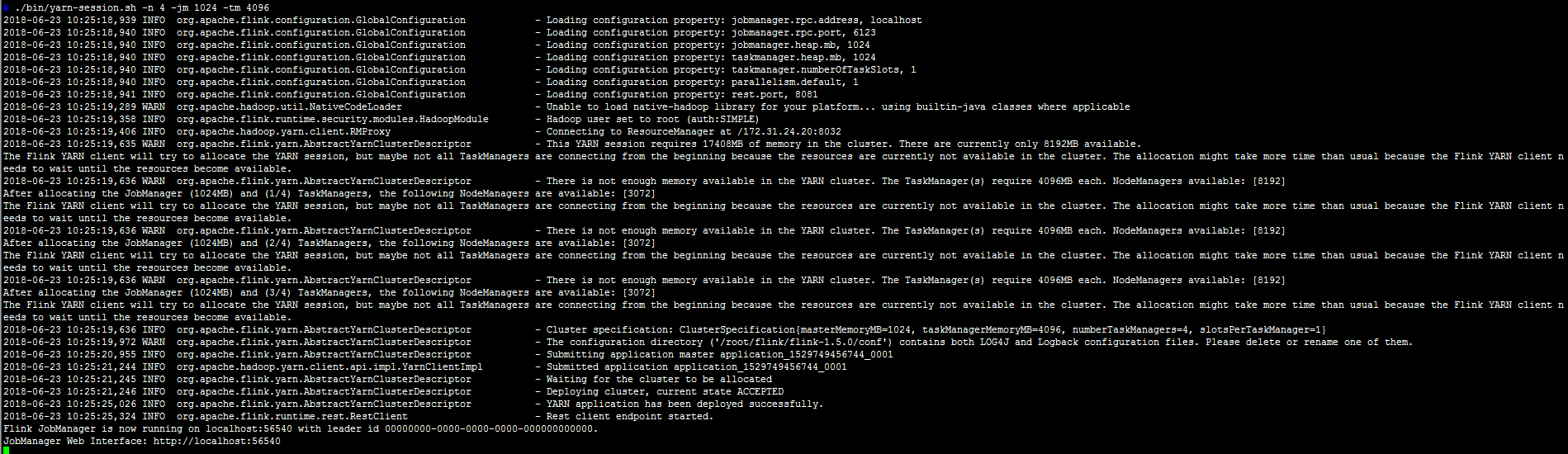
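The 0.0.0.0:8032 symptom is what the YARN client falls back to when it cannot read yarn-site.xml, so it may be worth checking the variable before launching. The following is only a minimal sketch: the function name check_hadoop_conf is made up for illustration, and it assumes that finding a yarn-site.xml file in the directory is a good enough sanity check.

```shell
# Hypothetical helper: succeeds only if the given directory looks like
# a Hadoop configuration directory (i.e. it contains yarn-site.xml).
check_hadoop_conf() {
  conf_dir=$1
  if [ -z "$conf_dir" ]; then
    echo "HADOOP_CONF_DIR is not set"
    return 1
  fi
  if [ ! -f "$conf_dir/yarn-site.xml" ]; then
    echo "no yarn-site.xml in $conf_dir"
    return 1
  fi
  echo "ok: $conf_dir"
}

# Check the current environment; print a hint instead of failing.
check_hadoop_conf "${HADOOP_CONF_DIR:-}" || echo "fix HADOOP_CONF_DIR before running yarn-session.sh"
```

If the check prints "ok", the session script should at least be able to locate the ResourceManager address configured in yarn-site.xml.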

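Then came the command I have by now run a hundred times: ./bin/yarn-session.sh -n 4 -jm 1024 -tm 4096

It still failed. Hadoop 2.4.1 has problems running on a 64-bit operating system, so I switched to 2.8.4, and then startup failed in a different way: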

the Flink Yarn cluster has failed.

2018-06-23 10:18:33,156 ERROR org.apache.flink.client.program.rest.RestClusterClient - Error while shutting down cluster

java.util.concurrent.ExecutionException: org.apache.flink.runtime.concurrent.FutureUtils$RetryException: Could not complete the operation. Number of retries has been exhausted.

The YARN web UI showed:

is running beyond virtual memory limits. Current usage: 337.8 MB of 1 GB physical memory used; 2.3 GB of 2.1 GB virtual memory used. Killing container.

Damn, the container ran out of (virtual) memory. I changed the YARN configuration files as follows.

Reference: https://stackoverflow.com/questions/43441437/container-is-running-beyond-virtual-memory-limits

mapred-site.xml

<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <property>
    <name>mapreduce.map.memory.mb</name>
    <value>8192</value>
  </property>
  <property>
    <name>mapreduce.reduce.memory.mb</name>
    <value>8192</value>
  </property>
  <property>
    <name>mapreduce.map.java.opts</name>
    <value>-Xmx4096m</value>
  </property>
  <property>
    <name>mapreduce.reduce.java.opts</name>
    <value>-Xmx4096m</value>
  </property>
</configuration>

This adds the memory settings (I do wonder why Hadoop's defaults cannot be a bit larger).

yarn-site.xml

<configuration>
  <!-- Site specific YARN configuration properties yarn.resourcemanager.webapp.address-->
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.resourcemanager.webapp.address</name>
    <value>dashuju-prod-web-172031024020.aws:18088</value>
  </property>
  <property>
    <name>yarn.resourcemanager.address</name>
    <value>172.31.24.20:8032</value>
  </property>
  <property>
    <name>yarn.nodemanager.resource.cpu-vcores</name>
    <value>30</value>
  </property>
  <property>
    <name>yarn.resourcemanager.scheduler.address</name>
    <value>dashuju-prod-web-172031024020.aws:8030</value>
  </property>
  <property>
    <name>yarn.resourcemanager.resource-tracker.address</name>
    <value>dashuju-prod-web-172031024020.aws:8031</value>
  </property>
  <property>
    <name>yarn.resourcemanager.admin.address</name>
    <value>dashuju-prod-web-172031024020.aws:8033</value>
  </property>
  <property>
    <name>yarn.scheduler.minimum-allocation-mb</name>
    <value>2048</value>
  </property>
  <property>
    <name>yarn.scheduler.maximum-allocation-mb</name>
    <value>8192</value>
  </property>
  <property>
    <name>yarn.nodemanager.resource.memory-mb</name>
    <value>8192</value>
  </property>
</configuration>

My guess is that the number of vcores is related to the two parameters yarn.scheduler.maximum-allocation-mb and yarn.scheduler.minimum-allocation-mb, presumably as one divided by the other.

Restart YARN, then start Flink: ./bin/yarn-session.sh -n 4 -jm 1024 -tm 4096

FINALLY!!!!
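A plausible explanation for why the session now starts, sketched as arithmetic. As far as I understand it, the YARN scheduler rounds each container request up to a multiple of yarn.scheduler.minimum-allocation-mb, and the NodeManager kills a container whose virtual memory exceeds its physical allocation multiplied by yarn.nodemanager.vmem-pmem-ratio. That ratio is not set anywhere in this post, so the sketch assumes the Hadoop default of 2.1, which matches the "2.1 GB" limit reported for the 1 GB container. It also assumes the previous minimum allocation was the default 1024 MB. The function names are illustrative only.

```shell
# Round a container request (MB) up to the next multiple of the
# scheduler's minimum allocation (MB).
normalize_mb() {
  request_mb=$1
  min_mb=$2
  echo $(( (request_mb + min_mb - 1) / min_mb * min_mb ))
}

# Virtual memory limit = physical allocation * vmem-pmem-ratio.
# The ratio is passed multiplied by ten (21 means 2.1) so that plain
# integer arithmetic is enough; 21 is the assumed default.
vmem_limit_mb() {
  echo $(( $1 * ${2:-21} / 10 ))
}

# A 1024 MB request (-jm 1024) under the assumed old minimum of 1024 MB
# and under the new minimum of 2048 MB.
before=$(normalize_mb 1024 1024)
after=$(normalize_mb 1024 2048)
echo "before: ${before} MB physical, $(vmem_limit_mb "$before") MB virtual limit"
echo "after:  ${after} MB physical, $(vmem_limit_mb "$after") MB virtual limit"
```

Under these assumptions the 1024 MB container had a virtual limit of roughly 2.1 GB, below the 2.3 GB it used, while the same request rounded up to 2048 MB gets roughly 4.2 GB of headroom. If that reading is right, the yarn-site.xml change is what made the difference, since the mapreduce.* settings only apply to MapReduce jobs.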

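The startup log contains the address of the Flink web console: http://52.202.56.79:56540. It is generated dynamically, so it is different on every start.

It finally works. Next post: running a job with Flink on YARN, and after that: Flink on YARN HA.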

One more note: Hadoop uses SSH port 22 by default. It can be changed with: export HADOOP_SSH_OPTS="-p 20002 -o ConnectTimeout=1 -o SendEnv=HADOOP_CONF_DIR"

Reference: http://blog.dongjinleekr.com/changing-port-no-of-hadoop-cluster/

2018-06-25

To keep the Flink session running in the background (detached), add -d to the start command:

./bin/yarn-session.sh -n 4 -jm 1024 -tm 4096 -d

-n is the number of TaskManagers, and -tm 4096 gives each TaskManager 4 GB of memory.
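To get a feel for how much memory these flags ask YARN for in total, here is a rough sketch. It assumes all n TaskManagers are actually started and ignores any rounding the scheduler applies; the function name session_request_mb is made up for illustration.

```shell
# Total memory (MB) requested by a session: one JobManager container
# plus tm_count TaskManager containers, before scheduler rounding.
session_request_mb() {
  tm_count=$1
  jm_mb=$2
  tm_mb=$3
  echo $(( jm_mb + tm_count * tm_mb ))
}

# Flags from the command above: -n 4 -jm 1024 -tm 4096
echo "requested: $(session_request_mb 4 1024 4096) MB"
```

The result can be compared against yarn.nodemanager.resource.memory-mb (8192 in the configuration above) multiplied by the number of NodeManagers in the cluster, which this post does not state.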

run example

./yarn application -kill application_1529749456744_0001

./bin/flink run ./examples/batch/WordCount.jar --input hdfs:///user/root/LICENSE-2.0.txt

./bin/yarn-session.sh -id application_1497192989423_0002

Submitting a job directly to YARN

./bin/flink run -m yarn-cluster -yn 2 ./examples/batch/WordCount.jar --input hdfs:///user/root/LICENSE-2.0.txt

2019-06-13

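When Flink is deployed in YARN mode, restarting the YARN cluster (a rolling restart, one node at a time) does not affect the Flink streaming jobs running in the cluster, provided checkpointing is enabled.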